Accurate data analysis sets the stage for beautiful, data-driven decisions and, as a result, operational efficiency, higher performance, positive brand image, happy customers and, ultimately, increased revenue. And the cornerstone of it all? The art of handling the sources, flow, and integrity of data. Or, simply put, successful data quality.

Some learn this lesson the hard way though.

“Mayday! Mayday! We’re sinking! We have a major data-leak”

- In 1999 NASA’s $125-million Mars Climate Orbiter was lost due to a failure of spacecraft engineers to convert vital data from English to metric measurements.

- In 2012 JPMorgan Chase suffered a $6 billion loss due to a trading strategy that relied on faulty data.

- In 2013 Tesco made a similar mistake, with data integration issues causing their profits to be overstated by £250 million.

And it appears that history is not the most effective teacher, since the same mistakes continue to echo over and over again.

- In 2021 Zillow revealed that it suffered a loss of approximately $304 million due to a faulty model used to predict the house price — despite the fact that it had thousands of sophisticated statistical models and a huge amount of data, it still suggested that the company bought properties for a price much higher than their selling price.

- In 2020 Public Health England (PHE) underreported almost 16,000 coronavirus cases in England due to the use of an outdated file format (XLS) in Microsoft Excel software.

- In 2018 Amazon’s AI-powered recruitment tool was found to be biased against women, as it was trained on historical data.

And unfortunately, damaged reputation and financial losses are merely the tip of the iceberg when it comes to negative aftermath. As much as proper data gives limitless opportunities for growth, any flaw in the data quality pipeline has the infinite potential for disasters. And should you let errors and mistakes repeat and accumulate, creating holes in your business analysis and decisions, well… if you’ve ever played Jenga, you can picture the outcome.

But why are these poor data quality issues so widespread? Is it possible to find all the roots of those errors and guarantee avoiding the drain on your time, money, and opportunities?

The response from Ivan Zolotov, our data engineer, equipped with 10+ years of expertise, 15+ successfully completed big data projects, many involving AWS Glue, and willingness to unveil the data quality nuances, is short and sweet: absolutely yes! Given the right arsenal of tools, solutions and approach, of course.

Putting together a puzzle: non-obvious components of data quality

What comes to your mind when you think about data quality? Probably, something like “a set of metrics that allow us to understand how much of our data is in an adequate format, and to what extent it may be incorrect, lacking or irrelevant.”

And if you were nodding along this definition, we’ve got some news for you.

The point is that the concept of data quality in data engineering is not so one-dimensional and doesn’t stop at crafting metrics to show, for example, how many empty rows there are in your dataset, or how many data have encoding errors.

“Analyzing the data in a dataset (i.e., data profiling) is just one of the components of data quality and covers only a portion of the data quality-related manipulations. However, when these two concepts are equated with each other, we get a narrow and simplistic perception of data quality, which leads to tons of challenges and mistakes.”

Now that we’ve ruled out what data quality is not, the question remains: what is it exactly then?

The answer lies in the diversity of processes and operations involved, which go far beyond data profiling. Data quality, within data engineering services, includes metadata analysis, assessing how data is retrieved and then kept in the system, setting up monitoring, testing, configuring reporting, and more. So, by combining these operations in a meaningful way, we identify the following components that are also integral to data quality:

1. Data governance

Data governance involves collecting, processing, and establishing standards and protocols for working with data. While algorithms may be part of this process, it is often more important to establish rules that will be used by the people involved.

One key aspect of data governance is also the creation of a unified, centralized data catalog that contains details (i.e., metadata) about all the data used in the company. Such a single point of data management significantly eases work and allows for quick responses to changes and requests.

Therefore, data governance helps ensure full compliance with GDPR (General Data Protection Regulation) in terms of data access, with a set of rules to prevent, for example, data engineers from accessing private sets of information.

“Data protection in this case does not refer to encrypting it with a password. Here we are talking about the fact that if a person does not have access to certain data, it is as if the data does not exist for them. They will not know that there is any data at all.”

2. Data lineage

Data lineage is the history of the data, which provides a complete record of the origin, movement, and transformations of data, enabling you to trace it from its source to its destination, and to understand how it has been changed and by whom.

One of the most advantageous aspects of data lineage is its ability to visualize the data flow across various systems and procedures. This valuable feature empowers data scientists to pinpoint possible bottlenecks, inaccuracies, and to ensure overall transparency and trustworthiness of data.

Hunting for perfect data

Before we delve into the details of effective data quality tools and techniques, let’s identify the gems amidst the rubble and differentiate between low-quality and high-quality data that we will refine into precious insights.

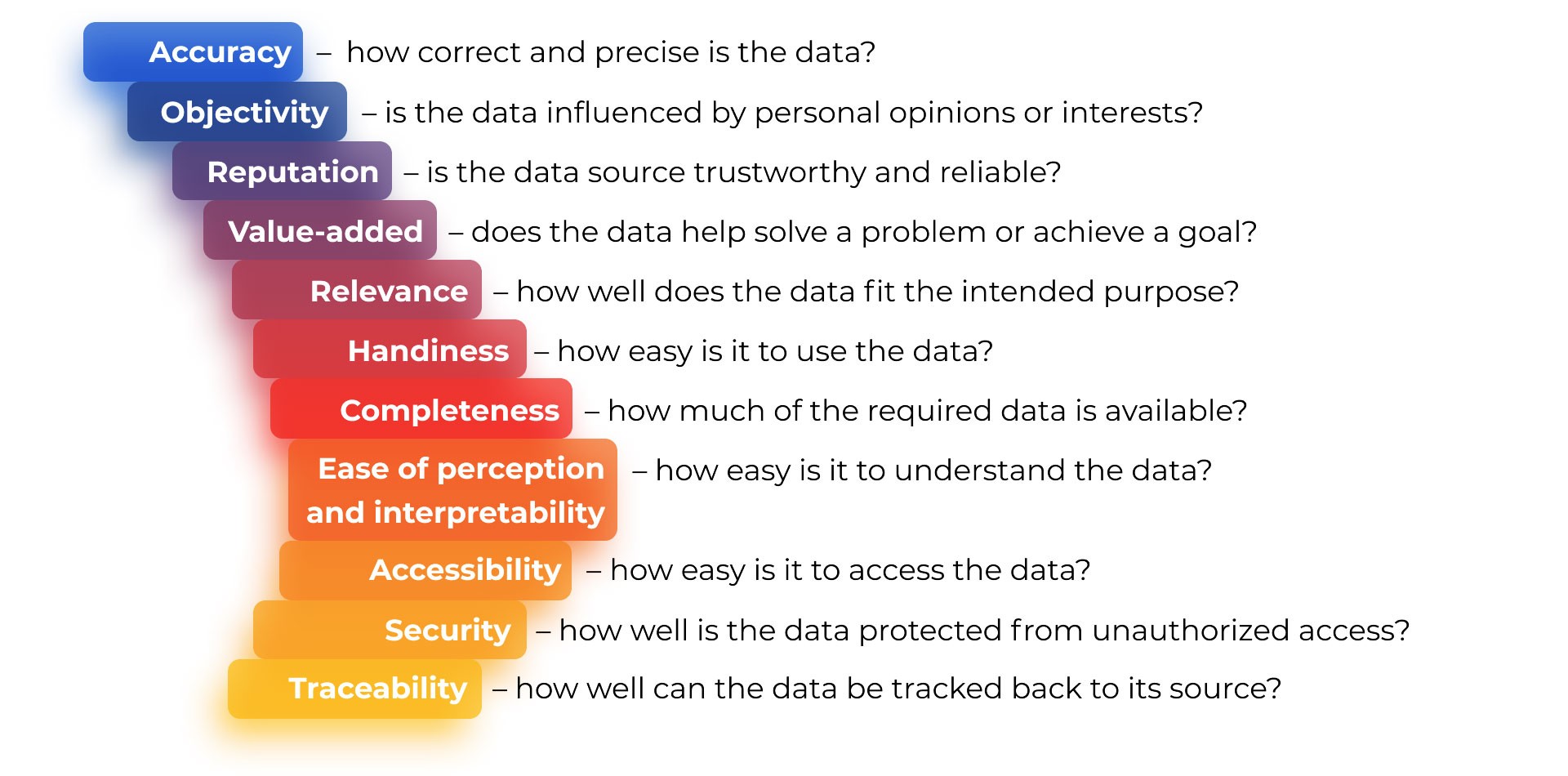

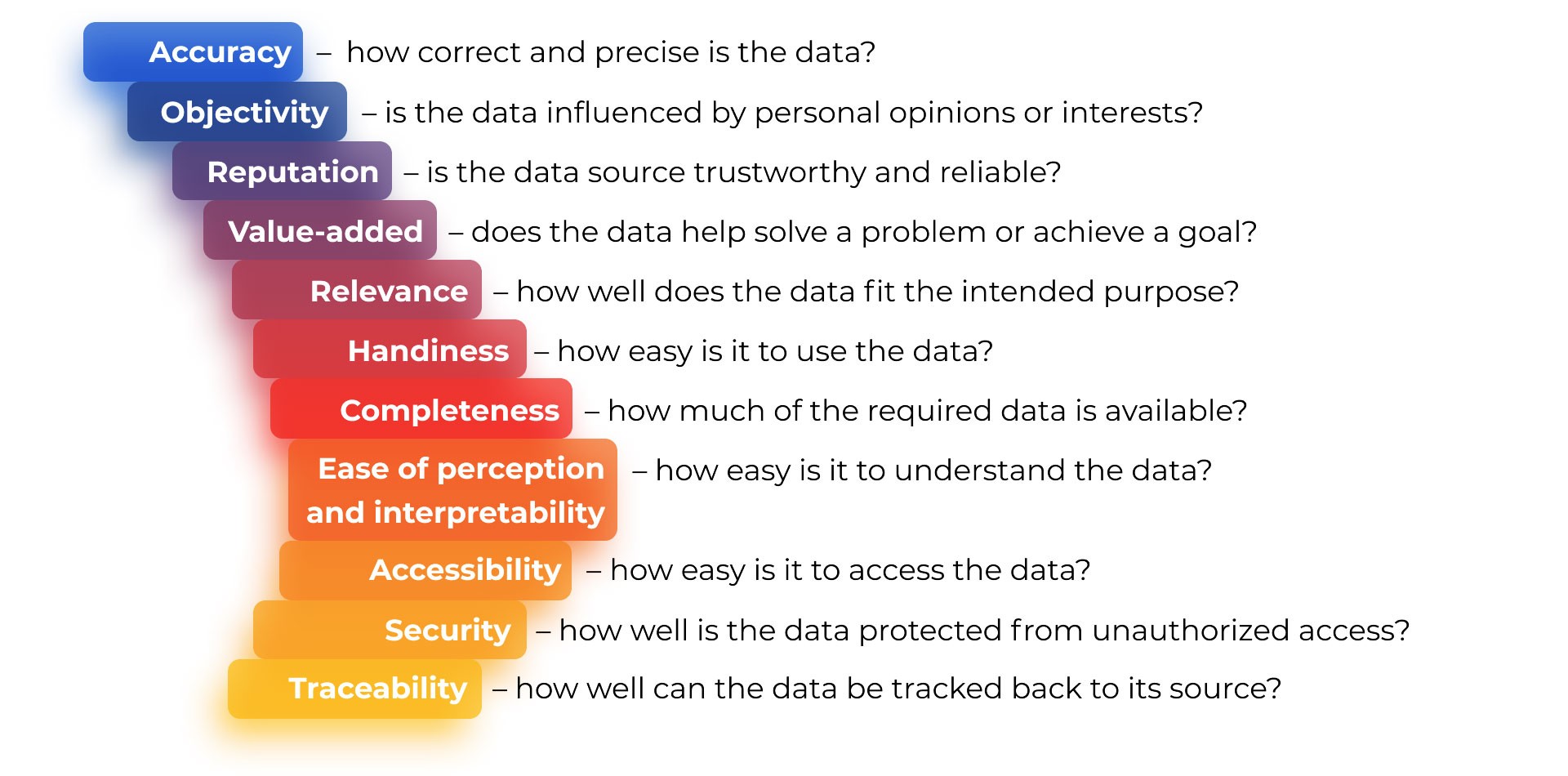

The good news is that we are not left to the mercy of fate here, as there are already 11 specific assesing criteria that ensure the right outcome.

“Even if you have the most advanced AI and data engineers in the world, not everything can be evaluated by them alone, especially when dealing with intricate subject matter. For instance, a data engineer might not be able to determine the accuracy of “average protein content in blood” without the input of a domain expert. Therefore, it’s crucial to collaborate with experts in the relevant field.”

A template of an efficient data quality architecture

Fantastic, we’ve figured out what ideal, everybody-dreams-of data should look like. But what’s the plan now? How can we ensure that it’s handled correctly, particularly in industries where even minor mistakes can lead to massive expenses or even physical harm?

Let’s shift our focus from the theoretical aspect to the practical implementation and examine how a perfect data quality process functions.

Step 1 — Data discovery

An initial step in the data analysis process, which involves viewing data as an abstract set of information, understanding its characteristics, and deciding how to organize it for further analysis. At this stage data analysts gather requirements, evaluate data sources depending on the subject area and decide on how the data will be stored (in a data lake or some relational databases).

Step 2 — Data profiling

As previously mentioned, it’s common to confuse this step with the whole concept of data quality in data engineering. However, we’re only on the second step of the process.

Essentially, data profiling involves taking a representative sample of your available data, analyzing it, and using the results to establish rules for evaluating the entire dataset. This can be done by a domain expert together with a data engineer, or through the use of smart algorithms.

Step 3 — Data rules

Based on the results obtained during data profiling, you get sets of criteria (data rules) which are then used automatically or manually to assess the data.

Step 4 — Data distribution and remediation

Depending on whether or not the data set meets the rules, you either remediate the data or not.

Step 5 — Data monitoring

This step is all about continuous checking of the data to ensure that it remains accurate and consistent on each data pipeline segment.

“This isn’t a “set it and forget it” situation. Think of this process as of a continuous cycle of monitoring and refinement, ensuring that both new and old data are under the microscope for any potential problems.”

The heroes safeguarding the data quality

Even with the impressive abilities of AI and machine algorithms, ensuring that the entire process runs smoothly still requires some good old human oversight. So behind every successful data analysis process, there’s a team of dedicated individuals relentlessly protecting data accuracy.

Overall business owner — a person accountable for the whole project. His responsibilities include maintaining and managing data accuracy, completeness, and suitability for the organization’s requirements. The business owner is tasked with developing and implementing data quality procedures and standards to guarantee that it satisfies the organization’s demands.

Data steward — a person in charge of a particular one or multiple steps of a data pipeline, who makes sure that the data that will pass to the next step is free of any issues and is consistent.

Scrubbing owner — a person responsible for the general process of “cleansing the data”. He locates missing cells, edits and corrects the data, ensuring it is consistent, without any typos, wrong naming, and other structural errors. He removes damaged or irrelevant data, and sometimes formats it in a language that is optimal for computer analysis.

How we can help

Do you want to discover the secret potential of your data? Our team of experts will uncover patterns, detect anomalies, and turn raw information into a strategic asset.

The magic wands to streamline data quality tasks

While having a team of experts who know the ins and outs of data management is essential, equipping them with the right set of tools that they can benefit from to maintain perfect data pipeline is equally important. And that’s where the following instruments come to the forefront.

AWS Glue

While AWS Glue is a fully managed ETL (extract, transform, load) service provided by Amazon Web Services (AWS), it has one feature that is of particular interest to us — Data Quality, which greatly simplifies the process by automating it. It can be in charge of profiling, creating quality rules, computing statistics, monitoring the current state of data pipeline, and alerting you in case it notices that the quality has become worse.

Data Quality Definition Language

It’s a standardized language used to define data quality rules and metrics that can be used to measure and monitor data quality.

Deequ

It is an open-source library for data quality assessment based on Apache Spark. It provides various metrics and tools to evaluate the quality of data, such as data completeness, consistency, and accuracy. It also supports customizable data quality rules and allows for automation of data quality checks. It does about the same thing as Glue, only if you don’t work with Amazon software.

Lake formation

This is a technology that enables the development of data access policies within a data lake using a high-level set of rules. We abstract from the data format on disk and specify a set of rules from the top to ensure that people, such as engineers, do not have access to private data in accordance with GDPR.

Lake formation allows restrictions not only on individual tables but also on columns within those tables. If we have anonymized data and personal data in one file, we can restrict access only to personal columns.

The pillars of data success

So, ladies and gentlemen, we’ve nailed it! We have constructed a great data quality pyramid strengthened by experienced data engineers, latest technologies, and quality standards — a recipe for success, wealth, and everything that’s hip.

There is only one more detail left — a solid foundation. The underlying principles that are the backbone of all the data quality operations in our team and the key to our consistent success.

Establishing a formalized set of rules

Without a defined, consistent set of rules used by all teams for evaluating the suitability and quality of data, there is a risk of confusion, creating duplicate data, or even storing it in different formats. So with the help of the rules standardization and properly set communication between everyone involved in the process, you can ensure that everyone is working towards the same goals and that the right data is evaluated effectively.

Setting a monitoring and reporting system

Having a monitoring and reporting system in place to alert you of any data quality issues is crucial. Even a simple email alert can do the trick. If you want to learn more about reporting systems, don’t hesitate to reach out to us.

Following the data governance guidelines

Needless to say that making sure there are no breaches or unlawful access to private, confidential information is crucial. Any failure to do so may result in some serious financial, reputational harm, and even legal liabilities.

On a practical level, we use an array of methods designed to make data quality pipeline function seamlessly and maximize benefits for all departments, which goes beyond these three major principles. If you’re eager to explore further, find out what strategies can be implemented for your particular case — our Big Data team is always willing to share our advice and offer assistance, so that unreliable data never jeopardizes your business revenue strategies.